Background

Oftentimes as an SRE I find myself troubleshooting load balanced systems, and depending on the situation, this can be quite challenging. Whether you are trying to figure out which backend server in a load balanced pool is throwing errors, or which data center or cloud region might be causing problems, it is not always obvious how to reliably send requests and track response status codes. I found myself opening multiple terminal windows and writing elaborate curl scripts to accomplish this. While that is a perfectly valid approach, I decided to build a tool that makes some common troubleshooting scenarios easier.

This year, my goal was to create an open source tool and push myself to actually formalize the process. I wanted to learn golang because of the language's popularity in the cloud native world, and since goroutines make concurrency easy, it was a good fit for making concurrent HTTP requests.

Scenarios

The following are some common scenarios I run into, and how this tool can help.

Multiple load balanced servers

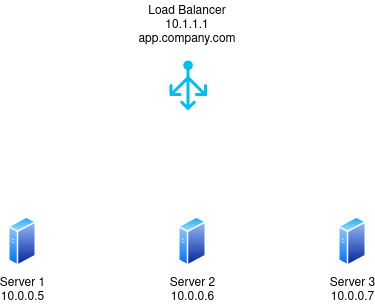

The diagram above shows a standard load balanced setup. When a request is sent to app.company.com, DNS points the request at the load balancer (10.1.1.1). A common load balancing algorithm is round robin, which forwards requests to the backend servers in turn:

- Request #1: Server 1 (10.0.0.5)

- Request #2: Server 2 (10.0.0.6)

- Request #3: Server 3 (10.0.0.7)

- Request #4: Server 1 (10.0.0.5)

- Request #5: Server 2 (10.0.0.6)

etc.

While this does help balance request load across backend servers, it can make it hard to tell whether Server 2 is having issues, because the issue only shows up on every third request. This gets even harder to troubleshoot if the issue is intermittent, since you may not have a reliable way to reproduce it. To check each server individually, we need to send requests directly to it. This could be as simple as sending an HTTP request to the server's IP address, something like this:

curl -v http://10.0.0.5/

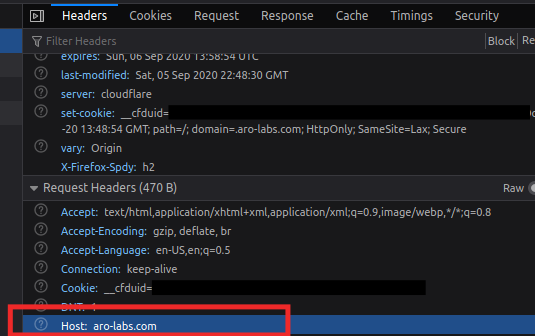

In your scenario, it may be as simple as this. However, many times web servers host multiple applications on a single server. If you just send an HTTP request to the IP address like the curl example above, how does the web server know which web application to forward the request to? Should it go to app.company.com, or app2.company.com? Most of the time, the answer to this question is using virtual hosting. When you send an HTTP request to a website, you are also including a “Host” header of the DNS name being requested. This can be seen using your browser’s dev tools. Here is the Host header for this blog:

If you send the request to an IP address directly, the Host header will contain the IP address instead of the application's DNS name, so your request will not get routed to the correct application. Virtual hosting allows multiple websites to be hosted on a single IP address. This matters because coordinating many applications across different IP addresses is not easy, and in the case of public IPv4 addresses, it can be expensive.

Actually sending a request directly to a backend server presents a challenge, because in the original scenario DNS for app.company.com resolves to the load balancer at 10.1.1.1. Assuming these web servers host multiple websites with virtual hosting, we need to preserve the Host header for our request to reach the correct application. For example, one way to send this request directly to Server 1 looks like this:

curl -v http://app.company.com --resolve app.company.com:80:10.0.0.5

This command sends an HTTP request to app.company.com, but instead of letting the regular DNS resolution process happen, it tells curl that app.company.com on port 80 is at 10.0.0.5. This works because the request still targets app.company.com, so curl sets the Host header to app.company.com. Meanwhile, the connection goes straight to Server 1 and bypasses the load balancer.

Multiple data centers / regions

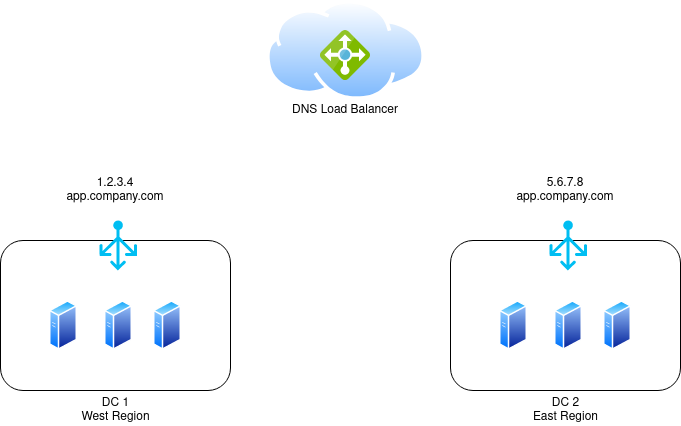

Another common scenario I find myself troubleshooting is similar to the first, but at the level of regional redundancy or multiple data centers. In the image above, we see two data centers that both host our app at app.company.com. In HA (highly available) environments, it is common to see applications load balanced at the DNS level. This is sometimes called traffic management or global load balancing. These DNS load balancers often send requests to a particular destination based on criteria such as:

- Geo location - If you're in the west, you'd have a higher likelihood of having a better experience by going to 1.2.3.4, since that data center is closer to you. In the same way, someone in the east would likely get sent to 5.6.7.8.

- Performance - Maybe the east just got new servers, or has a larger internet pipe. Requests might get sent to 5.6.7.8 because the DNS load balancer thinks you'll get a faster response.

- Weight - Since the east has the newest servers in this hypothetical scenario, maybe we want 75% of the traffic to go there, and 25% to go to the west.
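To illustrate the weight criterion, here is a minimal Go sketch of weighted selection using the hypothetical 75/25 split above. Real DNS traffic managers apply their own logic, and resolvers cache the answers. Treat this only as a picture of why a single client cannot predict which data center it will land on. The `target` type and `pickWeighted` function are illustrative names.

```go
package main

import (
	"fmt"
	"math/rand"
)

// target is one data center behind a hypothetical DNS load balancer.
type target struct {
	name   string
	ip     string
	weight int
}

// pickWeighted maps n in [0, total weight) onto a target; each target owns a
// slice of that range proportional to its weight.
func pickWeighted(targets []target, n int) target {
	for _, t := range targets {
		if n < t.weight {
			return t
		}
		n -= t.weight
	}
	panic("n is outside [0, total weight)")
}

func main() {
	targets := []target{
		{name: "east", ip: "5.6.7.8", weight: 75},
		{name: "west", ip: "1.2.3.4", weight: 25},
	}
	total := 0
	for _, t := range targets {
		total += t.weight
	}

	// Simulate 10,000 lookups: the split should land near 75/25, but any
	// single lookup could go either way.
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		t := pickWeighted(targets, rand.Intn(total))
		counts[t.name+" ("+t.ip+")"]++
	}
	fmt.Println(counts)
}
```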

These are a few examples, but hopefully you can see how it might be difficult to force traffic to a particular data center while troubleshooting. As in the first scenario, we can make requests directly to each data center with curl using something like:

West:

curl -v http://app.company.com --resolve app.company.com:80:1.2.3.4

East:

curl -v http://app.company.com --resolve app.company.com:80:5.6.7.8

Another approach

As I mentioned at the beginning of this post, I would end up with something like this (hoping to catch an intermittent issue):

while true; do curl -v http://app.company.com --resolve app.company.com:80:10.0.0.5; sleep 1; done

There is absolutely nothing wrong with this, but if you are trying to do the same thing against multiple backend servers, it gets cumbersome quickly. This is why I built Sharkie: to make this sort of troubleshooting easier. Specifying multiple backend servers in a single command looks something like this:

sharkie -u http://example.com -s 10.1.1.1 -s 10.1.1.2 -s 10.1.1.3 -e 200

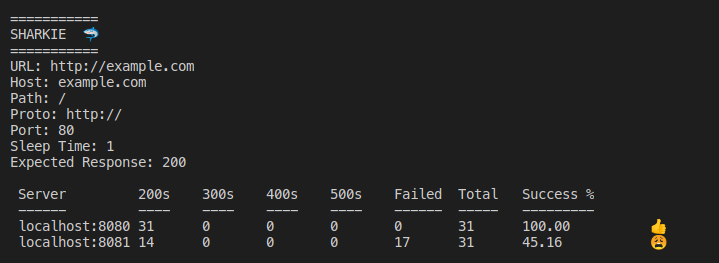

The host header for example.com is added to the requests sent to all three servers specified and actually tracks the response codes and failures to all servers while sending asynchronous requests. An example output (using localhost) looks like the following:

Final thoughts

In the end, there are multiple ways to troubleshoot scenarios like these, but hopefully this post highlights some of the challenges involved in troubleshooting HA / load balanced HTTP services. I have a lot of ideas for this project and am excited to keep developing it to cover more use cases. If you have ideas for how the tool can be improved, or even want to submit a PR, I am very open to it! In addition, I would welcome any advice on improving my noobish golang!